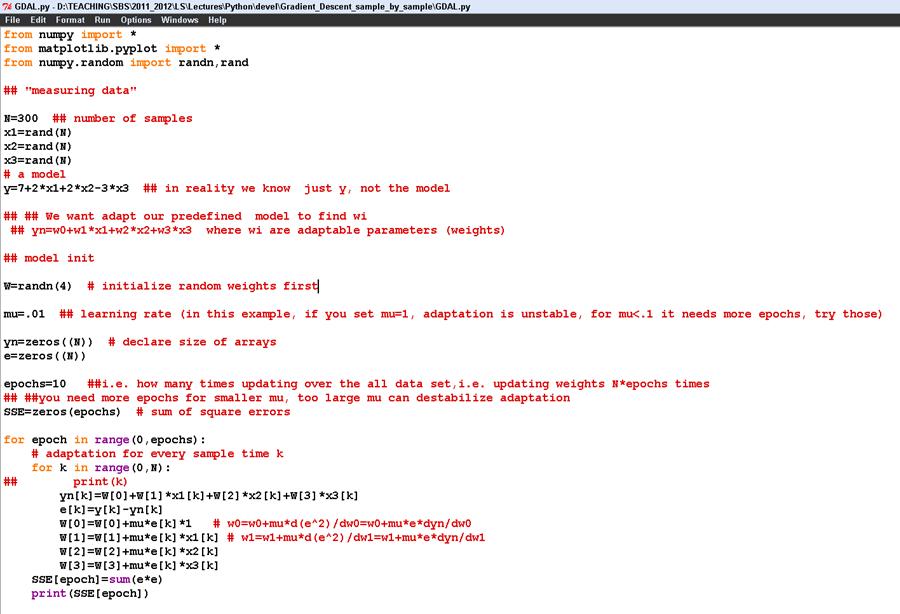

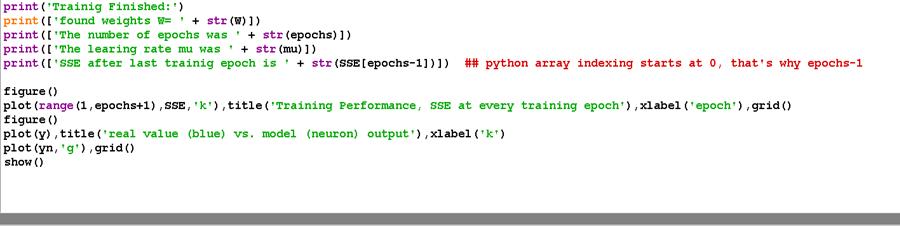

Example of a linear model adapted by gradient descent

(We could use least squares directly, but this algorithm can be extended to nonlinear models and does not require a matrix inverse.)
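For comparison, the direct least-squares solution mentioned above can be sketched as follows. This is a minimal illustration, not the computation behind the figures: the model form yn = w0 + w1·x, the synthetic data, and the name `least_squares_line` are all assumptions. The explicit division by `det` is the 2x2 matrix inverse that gradient descent avoids.

```python
import random

def least_squares_line(xs, ys):
    """Solve the 2x2 normal equations for yn = w0 + w1*x in closed form."""
    n = len(xs)
    sx = sum(xs)
    sy = sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = n * sxx - sx * sx          # determinant of the matrix being inverted
    w0 = (sy * sxx - sx * sxy) / det
    w1 = (n * sxy - sx * sy) / det
    return w0, w1

# Hypothetical data (not the data from the slides): y = 2 + 3x plus small noise.
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
ys = [2.0 + 3.0 * x + rng.gauss(0.0, 0.05) for x in xs]

w0, w1 = least_squares_line(xs, ys)
print(w0, w1)  # estimates of the assumed true weights 2 and 3
```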

Fundamental gradient descent rule:

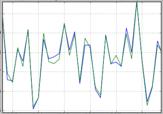

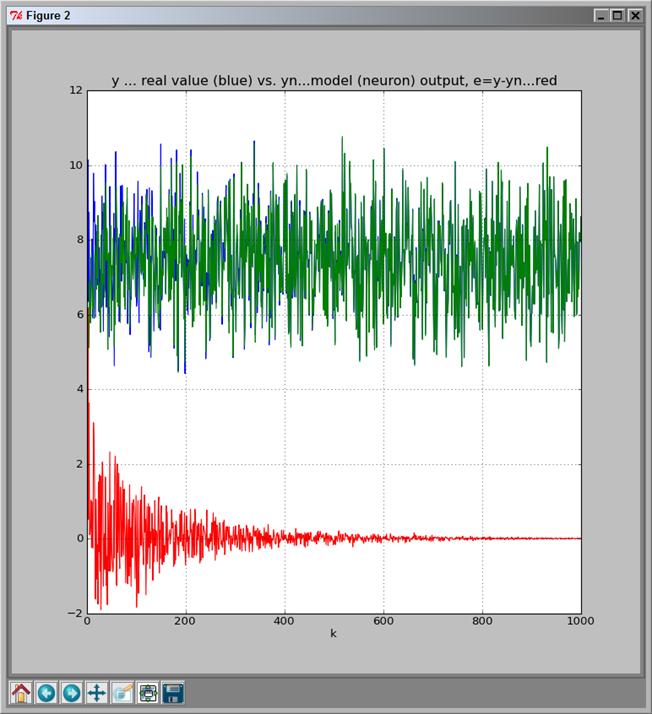

Single run of adaptation on longer data (1000 samples) – model approaches real value

μ = 0.1

error e = y - yn, where yn is the model output
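A single adaptation run of this kind can be sketched as below. The update formula itself is only shown in the figures, so this sketch assumes the usual sample-by-sample form for a linear model yn = w0 + w1·x: each weight moves by μ·e times its input. The data and the name `adapt_single_run` are hypothetical.

```python
import random

def adapt_single_run(xs, ys, mu):
    """One pass over the data, updating the weights after every sample."""
    w0, w1 = 0.0, 0.0
    errors = []
    for x, y in zip(xs, ys):
        yn = w0 + w1 * x      # model output
        e = y - yn            # error e = y - yn
        w0 += mu * e          # gradient step for the bias weight (input 1)
        w1 += mu * e * x      # gradient step for the slope weight (input x)
        errors.append(e)
    return (w0, w1), errors

# Hypothetical data (assumption): 1000 samples of y = 2 + 3x plus small noise.
rng = random.Random(1)
xs = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
ys = [2.0 + 3.0 * x + rng.gauss(0.0, 0.05) for x in xs]

(w0, w1), errors = adapt_single_run(xs, ys, mu=0.1)
early = sum(e * e for e in errors[:50]) / 50    # mean squared error, first 50 samples
late = sum(e * e for e in errors[-50:]) / 50    # mean squared error, last 50 samples
print(w0, w1, early, late)
```

With enough samples, one pass is sufficient for the weights to settle near the values that generated the data, and the error over the last samples is much smaller than over the first ones.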

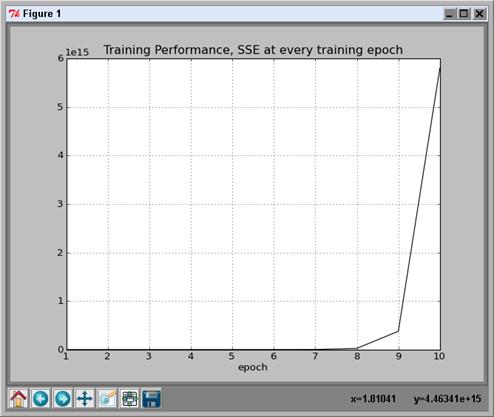

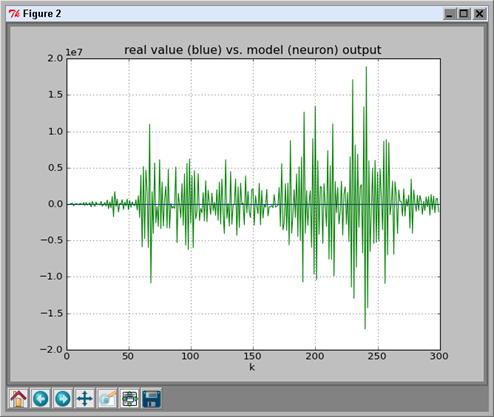

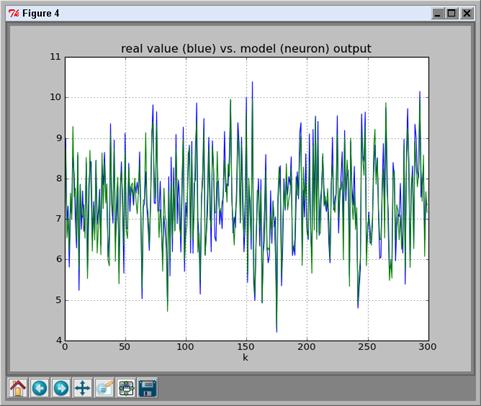

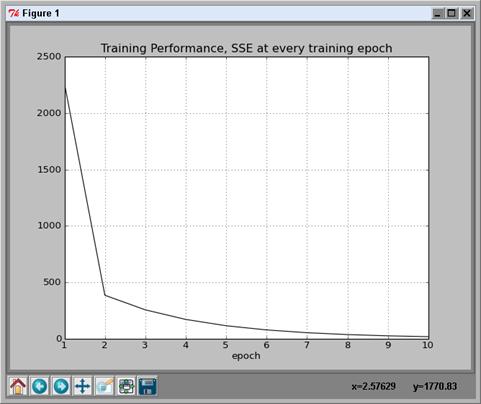

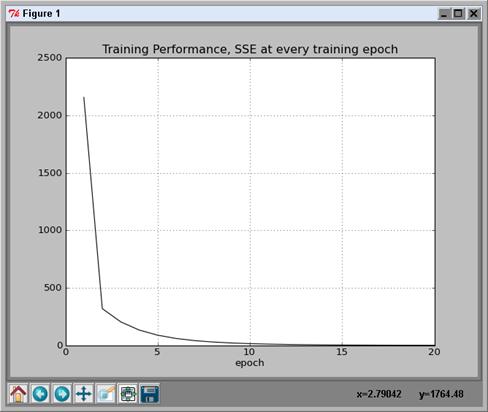

Adaptation in Epochs

epochs=10

μ = 0.01

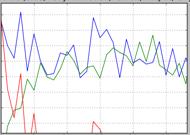

When the learning rate μ is chosen too small, the adaptation needs more epochs (if it can work for the data at all).
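The effect of the number of epochs at a small learning rate can be sketched as follows. The sketch reuses the same assumed per-sample update on a short, noise-free, hypothetical data set of 20 samples; `adapt_in_epochs` and `weight_error` are illustrative names, and the true weights (2, 3) are known only because the data are synthetic.

```python
def adapt_in_epochs(xs, ys, mu, epochs):
    """Repeat the sample-by-sample adaptation over the same data `epochs` times."""
    w0, w1 = 0.0, 0.0
    sse_per_epoch = []
    for _ in range(epochs):
        sse = 0.0
        for x, y in zip(xs, ys):
            e = y - (w0 + w1 * x)   # error before the update
            w0 += mu * e
            w1 += mu * e * x
            sse += e * e
        sse_per_epoch.append(sse)   # sum of squared errors in this epoch
    return (w0, w1), sse_per_epoch

# Hypothetical short data set (assumption): 20 noise-free samples of y = 2 + 3x.
xs = [-1.0 + 2.0 * i / 19 for i in range(20)]
ys = [2.0 + 3.0 * x for x in xs]

def weight_error(w):
    """Distance of the adapted weights from the weights that generated the data."""
    return ((w[0] - 2.0) ** 2 + (w[1] - 3.0) ** 2) ** 0.5

for epochs in (10, 20, 200):
    w, sse = adapt_in_epochs(xs, ys, mu=0.01, epochs=epochs)
    print(epochs, weight_error(w))
```

With μ = 0.01 the weights are still visibly off after 10 or 20 epochs on this data, and only a much longer run brings them close to the generating values.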

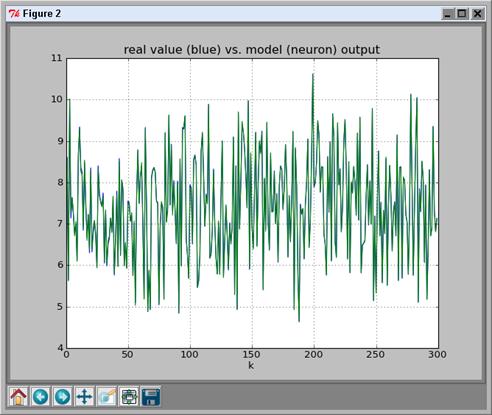

epochs=20

μ = 0.01

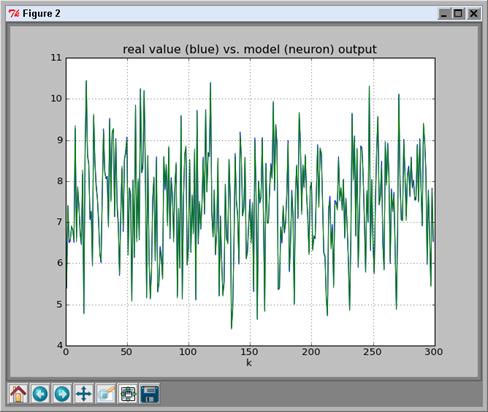

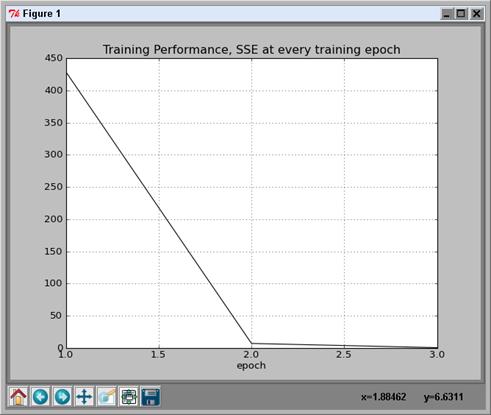

epochs=3

μ = 0.1

When the learning rate μ is chosen too large, the adaptation becomes unstable.

μ = 1 (!)

epochs=10
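The instability can be reproduced with a small sketch. It assumes the same per-sample update for yn = w0 + w1·x; for that update on noise-free data, a step reduces the weight error only when μ·(1 + x²) < 2, so the learning rate at which adaptation breaks down depends on the scale of the inputs. The data below are hypothetical and chosen so that μ = 1 violates this bound for every sample while μ = 0.1 satisfies it; `run_with_divergence_check` and the `limit` threshold are illustrative.

```python
def run_with_divergence_check(xs, ys, mu, epochs, limit=1e6):
    """Adapt sample by sample; stop early if the error magnitude exceeds `limit`."""
    w0, w1 = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            e = y - (w0 + w1 * x)
            if abs(e) > limit:
                return (w0, w1), True    # adaptation has blown up
            w0 += mu * e
            w1 += mu * e * x
    return (w0, w1), False               # error stayed bounded

# Hypothetical noise-free data (assumption) with inputs in [2, 4]:
# here 1 + x^2 >= 5, so mu = 1 gives mu * (1 + x^2) > 2 for every sample,
# while mu = 0.1 gives at most 1.7.
xs = [2.0 + 2.0 * i / 19 for i in range(20)]
ys = [2.0 + 3.0 * x for x in xs]

w_small, diverged_small = run_with_divergence_check(xs, ys, mu=0.1, epochs=10)
w_large, diverged_large = run_with_divergence_check(xs, ys, mu=1.0, epochs=10)
print(diverged_small, diverged_large)
```

The early exit keeps the unstable run from overflowing to infinity, which would otherwise turn the weights into NaN values.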